Pentagon’s Groundbreaking Test: U.S. Fighter Pilots Take Directions from AI in First-of-Its-Kind Aerial Exercise

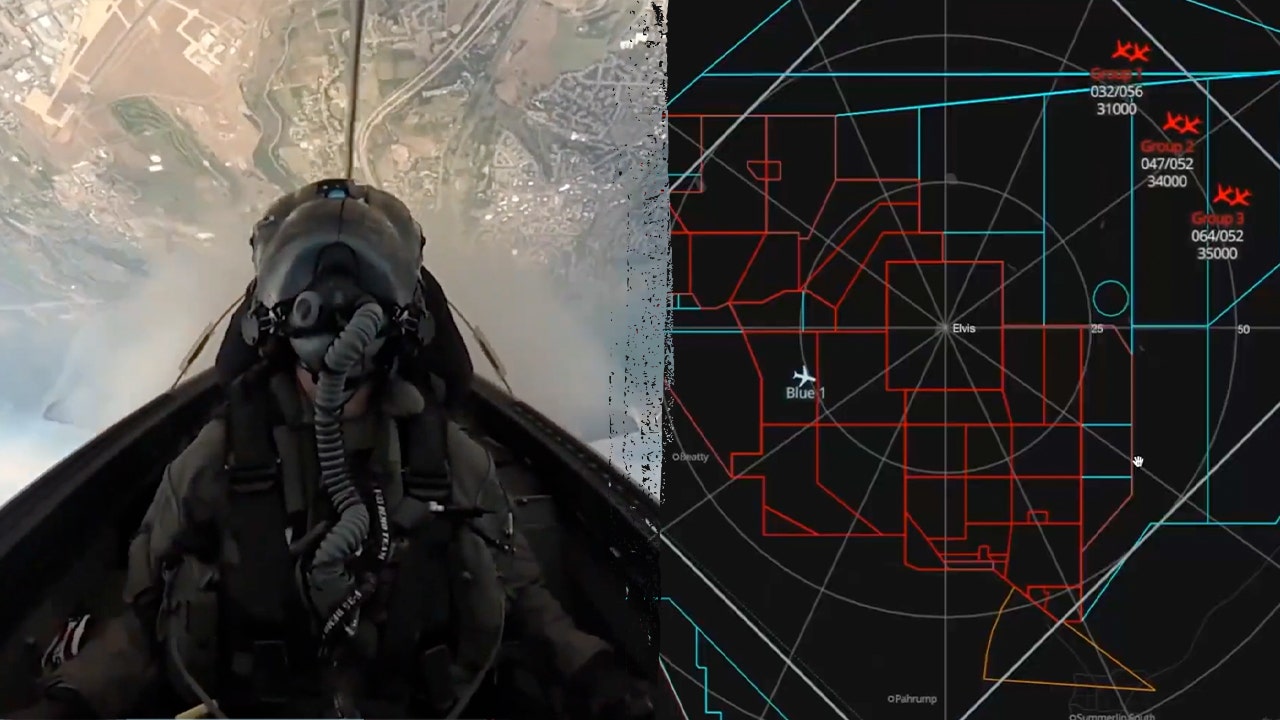

Washington, D.C. – August 30, 2025 – In a pioneering step toward revolutionizing aerial warfare, the U.S. Pentagon has successfully tested fighter pilots receiving real-time tactical directions from an artificial intelligence system for the first time. The August 2025 exercise, conducted jointly by the Air Force and Navy, integrated Raft AI’s innovative Starsage tactical control system into live flights involving F-16s, F/A-18s, and F-35s. This milestone marks a shift from traditional human-led air battle management to AI-assisted decision-making, potentially transforming how combat missions are executed by providing pilots with instantaneous threat assessments and positioning guidance.

The test, part of a broader joint military exercise evaluating advanced weapons, communications, and battle management platforms, simulated high-stakes scenarios where AI acted as an “air battle manager.” In conventional operations, pilots rely on ground-based human controllers who analyze radar, sensor data, and intelligence to direct aircraft positioning and responses. During the trial, Starsage stepped into this role, cross-referencing pilots’ reports with sensor feeds and the Air Tasking Order to confirm mission progress and issue “picture calls”—snapshots of enemy formations. In one simulated engagement, the AI identified a group of five adversary aircraft, delivering real-time tactical awareness that would typically take minutes to process manually.

Raft AI CEO Shubhi Mishra hailed the test as “groundbreaking.” “We haven’t seen our enemies test any similar technology,” Mishra said in an interview, highlighting the system’s ability to reduce response times from minutes to seconds. Unlike human managers, who juggle multiple aircraft, Starsage provides one-on-one support to each pilot, enabling faster and more accurate decisions in dynamic environments.

How the Test Unfolded: From Simulation to Skies

The exercise began with pilots querying Starsage for mission verification, with the AI analyzing sensor feeds to confirm that required assets were airborne. As scenarios escalated, pilots requested threat assessments, prompting Starsage to issue alerts. A human battle manager oversaw the proceedings, and pilots could override AI directives if needed, keeping humans in control. The system was integrated across the F-16, F/A-18, and F-35 platforms, demonstrating compatibility with existing U.S. fighter fleets.

This test builds on prior AI aviation advancements, such as DARPA’s Air Combat Evolution (ACE) program, which in 2023-2024 conducted dogfights between the AI-controlled X-62A VISTA jet and human-piloted F-16s at Edwards Air Force Base. Those flights, totaling 21 sorties, focused on autonomous maneuvering and safety protocols like collision avoidance. The August 2025 trial, by contrast, emphasized AI as a tactical director rather than a full autopilot, addressing the human-AI collaboration essential for complex air battles.

Mishra stressed ethical boundaries: “If it’s a life-or-death decision, humans should always be in the loop.” While the technology is ready, she noted the broader question: “The question is, do we let it?” This aligns with Pentagon debates on the future of manned fighters, as leaders like former Air Force Secretary Frank Kendall have explored AI’s role in reducing the need for pilots in the cockpit and enhancing unmanned systems like Collaborative Combat Aircraft (CCA).

Implications for Future Warfare and the AI Arms Race

The successful integration of Starsage could accelerate the U.S. military’s adoption of AI in combat, offering advantages in speed and precision amid rising global tensions. In an era of AI arms races—particularly with China weaponizing drones, code, and biotech—the test positions the U.S. ahead in tactical AI applications. Mishra suggested Starsage could even extend to civilian uses, like preventing mid-air collisions, referencing a 2025 incident near Reagan National Airport.

Critics and experts, however, raise concerns about over-reliance on AI, including ethical issues around autonomous lethal decisions and potential vulnerabilities to hacking or jamming. Arms control advocates worry about escalating conflicts, while proponents argue it mitigates risks to human pilots and counters manpower shortages. As the Pentagon eyes full deployment, this test underscores AI’s transformative potential: faster decisions, fewer errors, and a redefined battlefield where humans and machines collaborate seamlessly.

Reactions on X have been lively, with users debating the ethics and promise of AI-directed flight. One post quipped, “AI directing fighter jets? Skynet is here, but for good this time.” As further evaluations continue, the August test signals a new chapter in military aviation, where AI is not just a tool but a co-pilot in the fight for air superiority.

Sources: Fox News, Semafor, DefenseScoop, AP News, The Defense Post, X Posts